The Times is reporting today that the Royal Marines have purchased a new drone that can “distinguish between an enemy combatant and a friendly soldier”. This is probably journalistic license, but it sparked a conversation with Christian Braun about AI and distinction that naturally led to the issue of human reliance upon AI-generated knowledge for lethal decisions. Christian’s issue, which forms the jumping-off point for this post, is:

My main concern is that the human assessment may at one point almost exclusively rely on AI-based information. Should the drone’s distinction between combatant/civilian inform targeting decisions? Especially in the heat of battle, it may be hard to question the drone’s analysis.

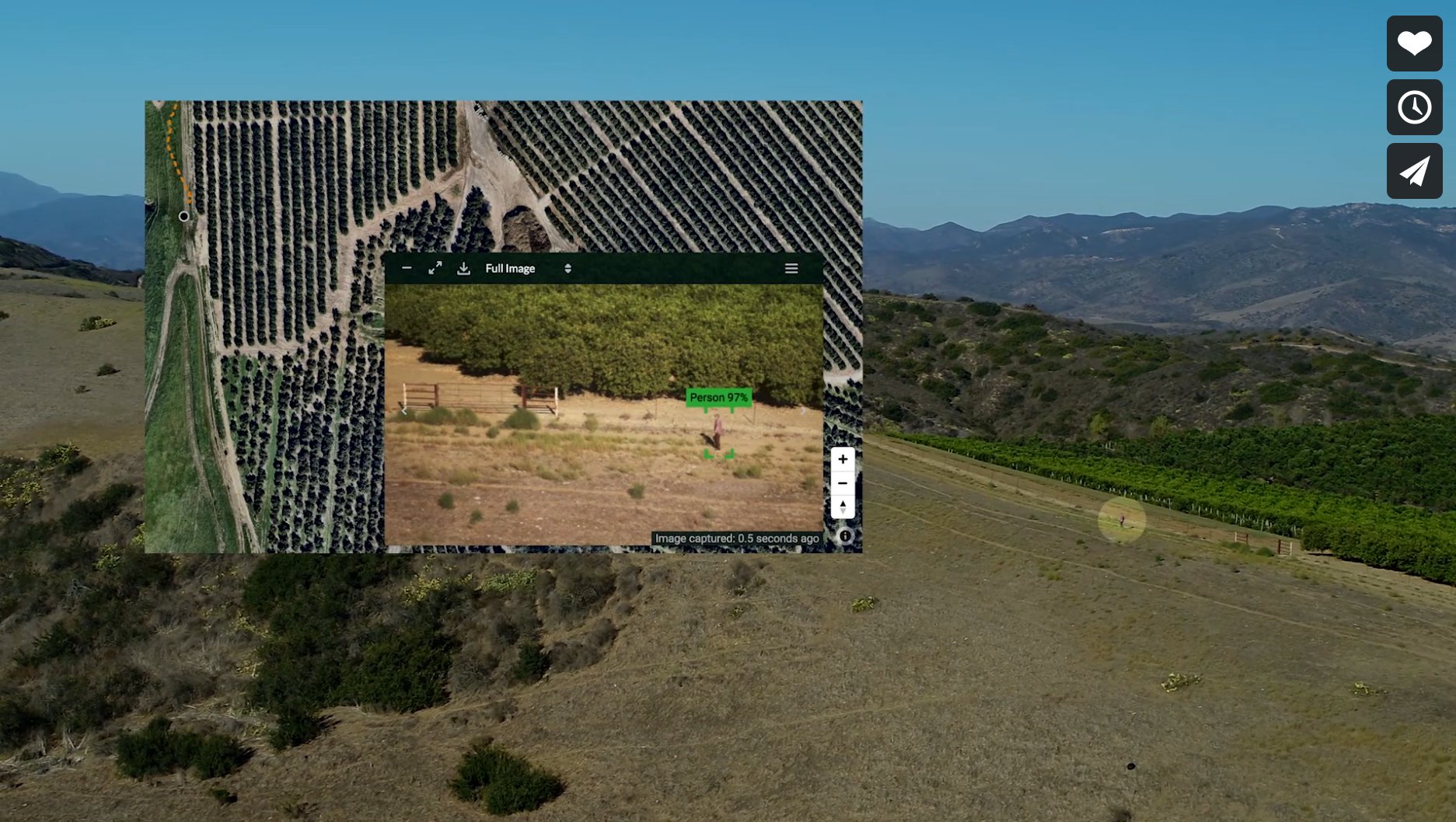

I am relaxed about militaries using tactical drones that pretty much fly themselves and autonomously flag persistent objects (cars, people, etc). To judge from the manufacturer Anduril’s page, I don’t think that this system is about flagging people as combatants (a very hard if not impossible task for numerous reasons that lots of people have already written about). Instead, it is about a system that can flag up objects in an environment without the constant supervision of a human operator. Rather than have someone fly a drone, squint at the video feed, and try to figure out what’s going on, they send a drone “over there” and wait for the system to point out objects of interest as and when it detects them.

This skips the “what if the system tags someone wrongly as a combatant” objection to military AI, but leaves two of Christian’s points intact. A system that doesn’t tag people as combatants might still be relied upon by humans for information, and in the heat of battle, it might be hard to question a drone’s analysis. This second remaining point follows from the first, and therefore I’m going to concentrate on that first issue with military use of AI for the rest of this post: Is it a moral problem for humans to be almost exclusively reliant upon AI-generated information when making assessments related to the use of force?

The ethics of AI in war is essentially a subset of the ethics of information processing in war. AI is problematised for a variety of other reasons, but here the problem is that a non-human computer system might generate a set of facts about the world upon which a human being is dependent for their worldview. As such, if the machine gets things wrong, the human might form a worldview that is wrong (that person over there is a combatant, when they actually are not). We therefore enter a weird world in which human beings might do very wrong things (killing civilians) based upon facts generated by computer systems that we can’t interrogate in the same way we might ask a human being “What the hell were you thinking?” after something bad happens.

Let’s call this the poisoned fruit model: before computer systems generated facts and made decisions about them, we relied upon other human beings for facts. AI can do a lot of information processing and fact generation, but its deployment introduces a “poison” of non-interrogability into an organisation, affecting everything downstream. Data fed into an autonomous system will be interpreted in a manner different from human cognition, and if you throw a rock in an AI Ethics conference you’ll likely hit someone working on the problems this raises, like algorithmic bias or explainable AI.

What I like about this model for understanding information processing in war is that it foregrounds the role of the socially generated knowledge that guides combatants in war. It echoes a way of thinking about the problem of distinction that I usually explain to my students as “the Private Holmes problem”:

Private Holmes is deposited in the battlefield. Using his powers of detection and reasoning, he realises that he is faced with a moral dilemma, the parameters of which he knows to be true by virtue of facts that he has ascertained himself. Using his superior skills of moral reasoning, he is able to identify which of the two possible options is the right one to pick, and acts accordingly.

The point of this problem is to highlight that some basic features of how the morality of war is addressed smuggle in some very dodgy premises about decision-making in conflict. Specifically, they permit you to think of individuals as reliant upon themselves for their understanding of a conflict, rather than fundamentally reliant upon others - and socially generated knowledge - with all the epistemic problems that entails. This, as I see it, is akin to the point that Joshua Rovner made about the disciplinary split between security studies and strategic studies: for security studies folks, war represents a failure of the system; for strategic studies folks, war is a problem to be managed. In this case, there are people who like to approach the ethics of war with a view to determining underlying principles of good conduct, to whom epistemic uncertainty is background noise, and then there are people who think that any theory of right conduct in war that isn’t premised on the fact that no-one can really know anything for certain during armed conflict is missing the point entirely.

Acknowledging the centrality of social knowledge in war to human decisions means inverting our opinion of decision-makers. We move from the god-like operator of the switch to stop a speeding trolley to average people surfing on a sea of ignorance, trying their best not to do something horrifically wrong. After all, human beings are always reliant upon chains of truth and facts that they cannot chase (at least, not if they hope to achieve more than getting out of bed each morning). In this sense, moral decision-making is coping with the fact that you’ll always be uncertain, even when the consequences are lethal. Recognising that war, like intelligence, involves adversarial epistemology, where the other side is always trying to mess up your understanding of the world, doubles down on this.

But back to the poisoned fruit of AI-generated facts. I am “relaxed” about the prospect of human reliance upon AI for two reasons. First, this reliance occurs within a wider field of social action in which participants are always reliant upon information and knowledge that they cannot personally double-check. Consider the role of persons delivering indirect fire based upon information provided by artillery spotters. They may have all the facts at their command, but at the end of the day, they are likely situated in a position where they cannot independently verify many of the most important ones (like, “Are there any civilians nearby?”). In these situations, human agency in some forms of military operation may be likened to the Chinese room argument - we might say that the individual appears to be exercising free agency, but are they really what we normally consider to be a free agent?

Second, any human action that is in theory dependent upon AI-generated facts occurs in the wider context of human-generated facts and knowledge. While it is theoretically possible that humans might one day become dependent upon AI systems to determine whether they pull a trigger or release a bomb, I think these systems would be integrated within militaries in much the same way that sensor systems have been. Processes like “kill boxes” are, in this sense, the better unit of analysis. Is a computer system that calculates the originating point of enemy artillery fire so that near-instant counter-battery fire can take place that much different to an AI-spotter system recognising an enemy artillery battery? If we’re looking to erase human reliance upon facts generated by computer systems that we can’t second-guess, then we have to wind the clock back in a huge number of military domains. In this case, what the poisoned fruit model points to is the need to consider the impact of AI on the already-existing synthesis of human-generated and machine-generated facts that enable action in modern warfare.

My own view is that a lot of the military AI worries amount to what Lee Vinsel has termed “criti-hype” - “criticism that both feeds and feeds on hype”. Worrying that humans may one day entirely depend upon AI for lethal decision-making is premised upon the entire displacement of humans within military organisations writ large. It requires a world where none of the social knowledge currently generated by military organisations, nor the hard-to-AI-ify tacit knowledge that helps generate it, matters anymore. Taking “human facts only” as a baseline similarly erases the fact that combatants regularly rely upon computer systems to tell them stuff that they have no means of independently verifying. My own bet is that recognition systems will allow AI systems to improve positive identification of a greater range of objects, but they are unlikely to get to the point where they can positively identify persons as combatants independent of other, human, cognitive activity. Bad news for recognisable bits of military kit like tanks in cluttered environments, but good news for humans with careers in targeting.
